AI-powered coding tools are advancing quickly—and so are the ways teams are adopting them. Engineering leaders within our portfolio have been gathering to compare notes, share what’s working, and help each other accelerate adoption of AI coding assistants and other tools.

Here are some of the takeaways from our first few AI Coding Assistant Roundtables. Keep in mind that new ideas, lessons, and tools are emerging at a rapid pace, so we encourage companies to lean in, make time for AI experiments, stay nimble, and keep the momentum going. The biggest mistake you can make with AI is not getting started right away.

(This article was originally posted in July 2025 and reflects our experiences with AI-assisted coding to date. If you want to explore further how we help companies with AI, visit Mainsail AI Labs.)

🧰 Tool Adoption Snapshot

Most Used Tools:

A survey of our portfolio companies revealed that nearly half are currently using Cursor, with GitHub Copilot a close second. A few others are using JetBrains AI and Claude Code.

Some more quick points about our group’s preferences:

- Cursor has been widely adopted and is the current front-runner due to its model access, usage tracking, and newly introduced agent capability.

- GitHub Copilot is often used when teams are already using VS Code or Visual Studio and do not want to switch.

- Claude Code is widely used for long-form coding tasks and POC generation. The group has noted that deep, detailed prompting (e.g., multi-page API specs) produces better results with Claude and similar tools.

Common Barriers:

Tools like Windsurf lack SSO/admin controls. Cursor’s VS Code dependency limits adoption in JetBrains shops. Copilot had lagged behind—but recent improvements have put it back in the running.

What about ChatGPT (or other general purpose LLM tools)?

Many engineers still rely on ChatGPT, Gemini, Claude, etc. for quick tasks, even when embedded tools like GitHub Copilot are available. These remain favored for ad hoc scripting, SQL query debugging and optimization, and administrative tasks like policy drafting.

Key Takeaway:

Don’t get stuck. The landscape is shifting fast—what wasn’t viable two months ago might be high-performing today.

Model Play:

Regardless of the tool, test different models. According to the engineering leaders who attended our Roundtable sessions, Sonnet 3.7 continues to lead, but Gemini 2.5 is gaining ground for certain tasks.

✅ What’s Working

Rules & Prompts:

Cursor rules and GitHub coding guidelines are improving consistency in style, tone, and structure, and they help control how the models generate code. Consider using them heavily across the team to drive compliance with team standards.

Domain Injection:

Injecting schema files, PRDs, or custom rules gives AI context and can drastically improve code quality.
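As a rough illustration of domain injection, the sketch below prepends the contents of a few context files to a task description before it is sent to a model. The function name `build_prompt`, the `### Context` and `### Task` labels, and the character budget are all hypothetical choices for this example, not something any particular tool requires.

```python
from pathlib import Path


def build_prompt(task: str, context_files: list[Path], max_chars: int = 20_000) -> str:
    """Prepend project context (schemas, PRDs, rules) to a task description.

    max_chars is a crude, assumed budget that keeps the injected context
    from crowding out the task itself.
    """
    sections = []
    budget = max_chars
    for path in context_files:
        if budget <= 0:
            break  # budget exhausted, skip the remaining files
        text = path.read_text(encoding="utf-8")[:budget]
        budget -= len(text)
        sections.append(f"### Context: {path.name}\n{text}")
    sections.append(f"### Task\n{task}")
    return "\n\n".join(sections)
```

Files listed first get priority when the budget runs out, so it may help to put the schema or rules the task depends on most at the front of the list.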

Cross-Functional Use Beyond Engineers:

AI assistants are boosting output in SRE, QA, and Data teams too, from infrastructure scripting to test generation. AI use by designers (e.g., analyzing user surveys and building Figma designs) shows early traction. Product teams are also beginning to explore workflows that integrate AI, though adoption remains inconsistent.

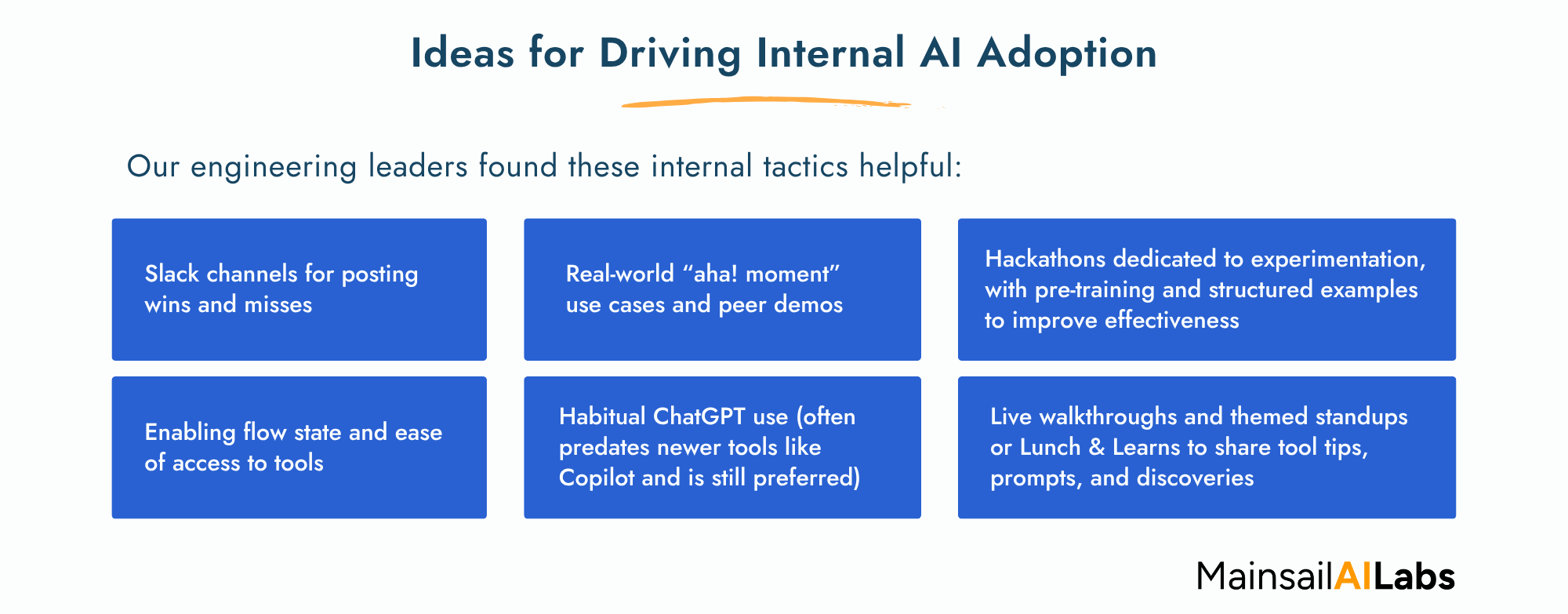

Cultural Wins:

Framing AI as a career accelerator, not a replacement, and providing hands-on training are crucial for team buy-in.

🚧 What’s Not Working

Vendor Lock-In:

IDE-specific tools (like Cursor with VS Code) create friction for teams on JetBrains or other platforms.

Language Gaps:

Tools are still inconsistent with legacy codebases, particularly older Java or .NET environments.

Limited context windows of LLMs:

Some of our companies are addressing this by using commit messages or summarized markdown memory files to retain continuity. Claude Code’s ability to ingest summaries to preserve workflow memory was highlighted as one solution. The consensus was that manually maintaining context summaries is currently the best workaround.
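A manually maintained memory file can be as simple as a dated markdown log that is trimmed before being pasted into a new session. The sketch below assumes one `## YYYY-MM-DD` heading per entry; the file layout and the helper names `append_memory` and `recent_memory` are illustrative assumptions, not a format any tool prescribes.

```python
import re
from datetime import date
from pathlib import Path


def append_memory(memory_file: Path, summary: str, day: date) -> None:
    """Append one dated summary entry to the markdown memory file."""
    entry = f"## {day.isoformat()}\n{summary.strip()}\n\n"
    with memory_file.open("a", encoding="utf-8") as fh:
        fh.write(entry)


def recent_memory(memory_file: Path, max_entries: int = 5) -> str:
    """Return the last few entries, ready to paste into a new session.

    Assumes summaries never contain a line that starts with '## ',
    since that prefix is used to split entries.
    """
    if not memory_file.exists():
        return ""
    text = memory_file.read_text(encoding="utf-8")
    entries = [e for e in re.split(r"(?m)^## ", text) if e.strip()]
    return "".join("## " + e for e in entries[-max_entries:])
```

Keeping only the most recent entries is one way to stay within a limited context window while still carrying forward the latest decisions.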

DevOps and Infrastructure-as-Code Challenges:

DevOps teams have generally shown slower adoption. YAML/JSON confusion between frameworks like CloudFormation and SAM introduces AI-generated syntax errors, yet one-off CLI scripts using LLMs are still valuable for DevOps tasks.
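One concrete instance of the CloudFormation and SAM mix-up is a template that uses `AWS::Serverless::*` resource types without declaring the SAM transform. The sketch below is a minimal check for that single case, assuming the template has already been parsed into a dictionary (for example from JSON; YAML templates with short-form tags such as `!Ref` need a CloudFormation-aware loader). The function name `check_sam_template` is hypothetical.

```python
SAM_TRANSFORM = "AWS::Serverless-2016-10-31"


def check_sam_template(template: dict) -> list[str]:
    """Flag SAM resource types used without the SAM transform declared."""
    transform = template.get("Transform")
    # Transform may be a single string or a list of transforms.
    transforms = transform if isinstance(transform, list) else [transform]
    has_sam_transform = SAM_TRANSFORM in transforms

    problems = []
    for name, resource in template.get("Resources", {}).items():
        rtype = resource.get("Type", "")
        if rtype.startswith("AWS::Serverless::") and not has_sam_transform:
            problems.append(f"{name}: {rtype} requires Transform: {SAM_TRANSFORM}")
    return problems
```

A check like this could run on AI-generated templates before review; it does not replace a full linter or a deployment dry run.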

Quality Assurance (QA) and CI/CD Limitations:

QA teams lag behind engineering in AI adoption. Tools like Katalon offer some AI support but lack full automation, and solutions like QA Tech offer partial automation with human review still required. QA’s future may lie in balancing exploratory AI-generated tests with human curation.

Mobile Development:

Mobile (iOS/Android) AI tooling is still underwhelming and no one has found anything that really excels.

🧪 Emerging AI Coding Practices

Architecting for AI:

Moving to monorepos or tighter package boundaries helps AI tools reason better and faster because they gain access to fuller context about the system.

Prompting for Performance:

Teams are prompting models to optimize not just for readability but also for speed, especially with complex queries. At the same time, while AI-generated code tends to be more verbose, teams are finding, somewhat surprisingly, that the verbosity improves long-term maintainability and readability, even if the result is less elegant than hand-written code.

AI Agents:

GitHub Copilot’s new review assistant and the various flavors of autonomous coding agents are showing early signs of traction and are helping with smaller tasks. Keeping each agent focused on a narrowly scoped Jira ticket has also helped prevent runaway compute and unwanted refactors.

Our portfolio is also finding that greenfield projects benefit more from AI agents due to clean structure, whereas legacy systems often overwhelm context windows and require “headlight” work to focus agents. The use of exploratory agents to identify relevant files and pass tasks to scoped agents was a suggested tactic.
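The exploratory step can be approximated without a model at all. The sketch below ranks source files by how often keywords from the task description appear in them, so that only the top matches are handed to a scoped agent. The keyword heuristic, the default file suffixes, and the name `rank_files` are assumptions made for illustration; an actual exploratory agent would likely use an LLM or a code index instead.

```python
import re
from pathlib import Path


def rank_files(root: Path, task: str, top_n: int = 5,
               suffixes: tuple[str, ...] = (".py", ".ts", ".java")) -> list[Path]:
    """Rank source files under root by keyword overlap with the task text."""
    # Crude keyword extraction: lowercase words of four or more letters.
    keywords = set(re.findall(r"[a-z_]{4,}", task.lower()))
    scored = []
    for path in root.rglob("*"):
        if not path.is_file() or path.suffix not in suffixes:
            continue
        text = path.read_text(encoding="utf-8", errors="ignore").lower()
        score = sum(text.count(k) for k in keywords)
        if score:
            scored.append((score, path))
    # Highest score first; ties broken by path for stable output.
    scored.sort(key=lambda item: (-item[0], str(item[1])))
    return [path for _, path in scored[:top_n]]
```

The returned list is the kind of shortlist that could be given to a scoped agent along with the ticket text, instead of the whole repository.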

Metrics and ROI:

While some of these tools report usage details, they generally cover only code initially written by AI or accepted by the developer. The quantity of AI-written code in the end product is not reported, which presents a skewed view of actual usage. Our group of engineering leaders discussed several tactics for measuring usage, but in the end, the amount of AI code that makes it into the end product is still a difficult metric to capture accurately.
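To make the gap between "accepted" and "shipped" concrete, here is a deliberately crude sketch of one possible measure: the fraction of AI-accepted lines that still appear verbatim in the final source. It assumes you can export the accepted lines from your tool, which may not be possible, and the name `ai_survival_rate` is hypothetical. It is not one of the tactics the group discussed.

```python
def ai_survival_rate(accepted_lines: list[str], final_source: str) -> float:
    """Fraction of AI-accepted lines found verbatim in the final source.

    Lines are compared after stripping whitespace. Edited lines count as
    lost, and trivial lines (a lone bracket, 'return') can match by
    coincidence, so treat the result as a rough signal only.
    """
    final = {line.strip() for line in final_source.splitlines() if line.strip()}
    candidates = [line.strip() for line in accepted_lines if line.strip()]
    if not candidates:
        return 0.0
    survived = sum(1 for line in candidates if line in final)
    return survived / len(candidates)
```

Even this simple version shows why the metric is hard: small human edits to an AI suggestion make the line count as lost, while boilerplate lines inflate the score.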

Final Takeaway: Keep Calm and Share On

As a leader, you must demonstrate commitment to AI by prioritizing it and using it yourself, creating the internal conditions for discovery, sharing technical insights like these within your team, and celebrating AI use across your company.

Here are more of my thoughts on Leading an AI-First Company. I hope we get the chance to discuss your AI transformation, from culture all the way to code and customer impact.